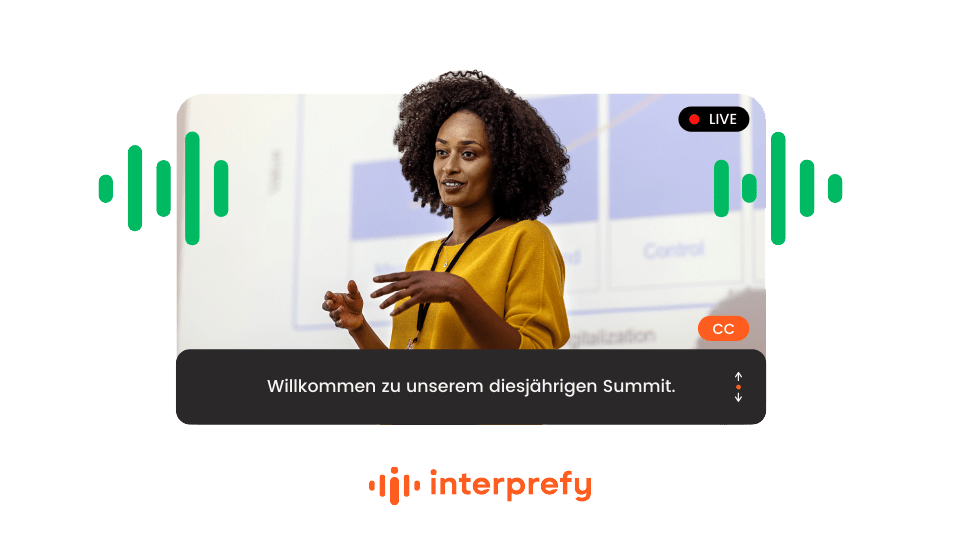

Enterprise-Grade, AI-Powered Live Captions

Deliver clear, real-time captions with Interprefy’s powerful enterprise AI technology. Our closed captions and subtitles transcribe spoken content instantly — either in the original language or translated — making events and meetings more accessible, inclusive, and compliant for global audiences.

AI Closed Captions & Subtitles

What Are They?

Interprefy AI-powered closed captions and subtitles provide enterprise-level real-time transcription and translation of spoken content during live events and meetings. Closed captions display the spoken words as text in the original language, making content accessible for participants who are hard of hearing or prefer to read along. Subtitles offer translated text, helping multilingual audiences follow the conversation in their own language. Designed for speed and accuracy, these AI-powered features make it easy to deliver inclusive and compliant events at scale.

Introducing

Interprefy Agent

We’ve built Interprefy Agent to bring enterprise-grade multilingual support directly into your online meetings. It connects smoothly with major platforms: Microsoft Teams, Zoom, Google Meet, and Webex. There’s no kit to install, and no virtual cables or complex audio routing are required. Just invite Interprefy Agent as you would any other participant, and it takes care of the rest, streaming your meeting audio to Interprefy and delivering live translation and captions in over 80 languages.


Closed Captions

AI-powered real-time speech-to-text transcription

Transcribe spoken language in its original form in real time, with enterprise-grade speech-to-text technology that provides outstanding accuracy in more than 80 languages.

Perfect for events where accessibility, clarity, and compliance are key.

Subtitles

AI-powered real-time speech-to-translated-text

Experience real-time translation of spoken content and enterprise-level multilingual accessibility with the combined power of speech-to-text and machine translation. Deliver live translated subtitles in more than 80 languages, helping you reach multilingual audiences with ease.

Enterprise-Ready for Every Event Format

In-Person Events

Enhance your in-person multilingual event experience with simultaneous interpretation, live speech translation and captions integrated into your AV setup.

Online Meetings & Events

Host high-quality meetings in multiple languages with our enterprise-grade technology that can integrate with any meeting platform.

Hybrid Events

Bring on-site and remote participants together with enterprise-level live translation and interpretation technology, supported end-to-end by our experts.

Broadcasting

Make your live broadcasts easy to follow in any language. We add real-time interpretation and captions, so everyone stays connected.

How It Works

Audio Input

Speech from your event — whether from a live speaker on-site or in a virtual meeting — is securely captured and streamed in real time.

AI Transcription & Translation

Using advanced automatic speech recognition and machine translation, spoken content is transcribed and (optionally) translated into 80+ languages with speed and precision.

Live Display

Captions and subtitles are delivered instantly to participants via the Interprefy web platform or mobile app — boosting accessibility, comprehension, and audience engagement.

Discover the Power of Interprefy Live Captions

Discover Interprefy Now

Interprefy Now leverages our enterprise-grade AI speech translation technology to deliver live translation and captions instantly. It transforms in-person, hybrid, or online meetings into secure multilingual experiences, giving instant access to over 80 languages from any mobile device — with no extra hardware, setup, or configuration required.

Explore AI Languages

Interprefy AI enables automatic, real-time speech translation across a vast network of languages, offering more than 6,000 language pair combinations. Explore the full list of available "to" and "from" languages to see how you can connect your speakers and audiences worldwide without barriers.
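As a rough sanity check on the figure above, the short sketch below counts directed "from" and "to" pairs. It assumes exactly 80 languages and that every language can be paired with every other one in both directions; neither assumption is confirmed here, and the function name is purely illustrative.

```python
def directed_language_pairs(language_count: int) -> int:
    """Count ordered (from, to) pairs, excluding a language paired with itself."""
    if language_count < 0:
        raise ValueError("language_count must be non-negative")
    return language_count * (language_count - 1)


# Assumption: 80 languages, all combinable in both directions.
pairs = directed_language_pairs(80)
print(f"80 languages give {pairs} directed pairs")
```

Under these assumptions the count comes out above 6,000, which is consistent with the number quoted.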

Why Choose Interprefy Closed Captions & Subtitles?

Interprefy’s AI-powered captioning and subtitling solutions help organisations make their events more inclusive, compliant, and globally accessible — without compromising quality or performance. Whether your event is online, onsite, or hybrid, Interprefy ensures every participant can read, understand, and engage — regardless of language or hearing ability.

ENSURE INCLUSIVE PARTICIPATION

ACCURATE AND REAL-TIME

MULTILINGUAL SUPPORT

SEAMLESSLY INTEGRATED

CUSTOMISABLE FOR YOUR EVENT

SUPPORTS COMPLIANCE WITH ACCESSIBILITY REGULATIONS

The Interprefy Difference

Live Captions at the European Film Awards

Explore how Interprefy’s enterprise-grade live captions and subtitles make your events more accessible and inclusive — delivering real-time closed captions and subtitles that enhance understanding and engagement for global audiences.

Frequently Asked Questions (FAQs)

Live captions and subtitles are ideal for organisations that prioritise accessibility, inclusion, and global reach. They ensure equal access for deaf or hard-of-hearing participants, support non-native speakers, and make content easier to follow in noisy environments or across diverse audiences. Beyond enhancing engagement, Interprefy’s captioning solutions help organisations meet accessibility and compliance requirements for digital events, such as the European Accessibility Act (EAA), WCAG, and the ADA. Our captions and subtitles can be added to webinars, conferences, hybrid events, or live streams, making every event more inclusive and compliant by design.

Unlike other providers, Interprefy’s live captioning and subtitling solution is purpose-built for professional events, combining advanced AI speech recognition with robust event infrastructure for exceptional accuracy and real-time performance. Captions and subtitles can be displayed anywhere your audience needs them — on event screens, laptops, or mobile devices — making content accessible and easy to follow for every participant. Our custom vocabulary feature ensures event-specific terms, acronyms, and speaker names are captured accurately, while 24/7 expert technical support and project management guarantee smooth delivery from start to finish. Interprefy also offers post-event media services, such as adding captions to recordings for on-demand content, extending accessibility long after the event ends.

Interprefy’s live captions deliver exceptional accuracy, powered by advanced AI speech recognition and refined for live event conditions. Under optimal audio conditions, accuracy can exceed 90%, as measured by the industry-standard Word Error Rate (WER); in other words, fewer than 10% of words are transcribed incorrectly. Real-world performance depends on factors such as sound quality, accent, and background noise. To maximise precision, organisers can use Interprefy’s custom vocabulary feature and benefit from expert event support before and during sessions. Together, these tools ensure captions remain clear, consistent, and accessible across any event.
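For readers unfamiliar with Word Error Rate, the sketch below shows how the metric is commonly computed: the number of word substitutions, deletions, and insertions needed to turn the caption output into the reference transcript, divided by the number of reference words. This is a generic illustration, not Interprefy's own evaluation method; the function name and sample sentences are made up for the example, and "accuracy" is taken here to mean 1 minus WER.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1      # reference word missing from captions
            insertion = curr[j - 1] + 1  # extra word in captions
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)


# Hypothetical example: one wrong word out of ten.
reference = "please welcome our keynote speaker to the main stage today"
captions = "please welcome our keynote speaker to the main page today"
wer = word_error_rate(reference, captions)
print(f"WER: {wer:.0%}, word accuracy: {1 - wer:.0%}")
```

Note that WER can exceed 100% when the captions contain many inserted words, so it is best read as an error rate rather than a strict percentage of the transcript.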

Yes. Interprefy’s live captions and multilingual subtitles can be added to any event format, whether on-site, hybrid, virtual, or fully live-streamed. Captions can be embedded directly into your event platform or live stream, or displayed on venue screens, ensuring every participant can follow along in real time. Our solution integrates easily with leading event and streaming platforms such as Zoom, Microsoft Teams, and YouTube Live, offering flexibility and accessibility without complex setup. With expert technical support and custom configuration, Interprefy ensures your captions run smoothly across all channels.

Interprefy’s live captions and subtitles are AI-generated, making them a highly cost-effective solution for adding real-time accessibility to any event. Pricing depends on factors such as event duration, number of languages, and streaming or platform requirements. Because there are no interpreter or transcriptionist costs, organisers can scale multilingual access across more sessions and audiences without exceeding their budgets. Interprefy’s flexible pricing options make inclusive, multilingual events more affordable than ever.

Let’s Plan Your Next Multilingual Event

Bring the magic of Interprefy's enterprise AI captions and subtitles to your upcoming conference or event. Our team is ready to assist you every step of the way.